Fine-tuning Llama 2

Meta Llama 2 is an open-source large language model trained on publicly available online data.

Parameter Size: 7 billion to 70 billion.

Architecture: Transformer architecture with modifications including a longer context length, grouped-query attention, and rotary positional embeddings. (Refer to this paper for training details.)

While a full fine-tune of Llama 2 is possible, this guide uses PEFT (Parameter-Efficient Fine-Tuning) and LoRA to fine-tune Llama 2 more efficiently. These approaches trade a small amount of flexibility for much lower cost and compute, aiming for strong performance with reduced resource requirements.

Before we get started, you will need a GPU node to fine-tune Llama 2. You can use brev.dev to get GPU nodes. The code in this guide was developed on a single NVIDIA A100 80GB GPU on the NYU HPC Cluster, but you can use any GPU type (RTX8000, V100, A10, ...) with at least 28 GB of memory.

Dataset

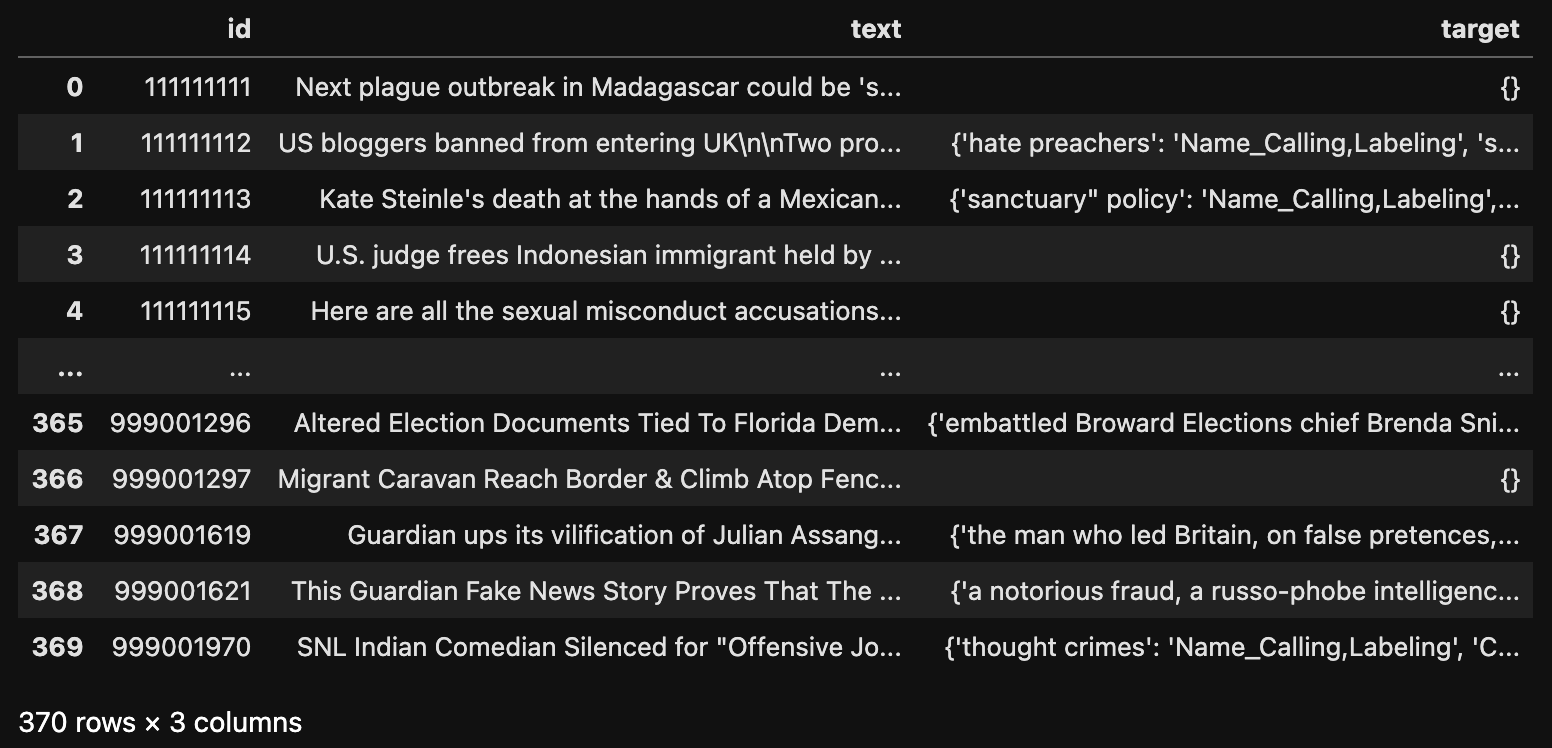

The code in this guide uses the Propaganda Techniques Corpus (PTC) dataset to fine-tune Llama 2 to detect propaganda techniques in news articles. PTC contains phrase-level annotations of propaganda techniques (e.g., name-calling, loaded language, flag-waving) in news articles.

Download PTC from here.

We need a pandas dataframe object with each row representing a news article and the corresponding phrase-technique instances in it. For the scope of this guide, we will focus on one technique at a time; the code can be modified to handle multiple techniques (a multi-label, multi-class classification setting).
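
As a rough sketch of the row layout described above, the snippet below builds records with a `text` column and a `target` column holding a JSON string that maps each annotated phrase to its technique. The character-offset span format, the `build_record` helper, and the sample article are assumptions for illustration, not the PTC loader itself.

```python
import json

def build_record(article_text, spans, technique="Name-Calling"):
    """Turn one article and its (start, end) character spans into a row.

    Assumption: annotations are given as character offsets into the article.
    """
    phrases = {article_text[start:end]: technique for start, end in spans}
    return {"text": article_text, "target": json.dumps(phrases)}

# Hypothetical article and annotation, for illustration only.
article = "The senator, a known crook, spoke today."
record = build_record(article, [(15, 26)])
print(record["target"])
```

A list of such records can be passed to `pandas.DataFrame(...)` to obtain the dataframe used in the next step.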

Next, we will turn this pandas dataframe into a Hugging Face datasets.Dataset object.

from datasets import Dataset

finetune_train_dataset = Dataset.from_pandas(train_df)
finetune_dev_dataset = Dataset.from_pandas(dev_df)

Model

Next up, we load the model with 4-bit quantization using bitsandbytes.

import torch
from transformers import AutoTokenizer, AutoModelForCausalLM, BitsAndBytesConfig
from accelerate import FullyShardedDataParallelPlugin, Accelerator

base_model_id = "meta-llama/Llama-2-7b-hf"

quant_config = BitsAndBytesConfig(
    load_in_4bit=True,
    bnb_4bit_quant_type="nf4",
    bnb_4bit_compute_dtype=torch.bfloat16,  # torch.bfloat16 on A100; use torch.float16 on RTX8000 or V100
    bnb_4bit_use_double_quant=True,
)

model = AutoModelForCausalLM.from_pretrained(
    base_model_id,
    quantization_config=quant_config,
    device_map={"": Accelerator().local_process_index},
)
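
To see why 4-bit loading matters, the back-of-the-envelope estimate below computes the memory needed just to hold the weights of a 7-billion-parameter model at different precisions. It is a rough sketch: the `weight_memory_gb` helper is hypothetical, and the figures ignore activations, LoRA parameters, optimizer state, and quantization overhead.

```python
def weight_memory_gb(num_params, bits_per_param):
    """Approximate memory for model weights alone, in gigabytes (10^9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9

num_params = 7e9  # roughly the 7B model used in this guide
for name, bits in [("float32", 32), ("bfloat16", 16), ("4-bit (nf4)", 4)]:
    print(f"{name:>12}: {weight_memory_gb(num_params, bits):.1f} GB")
```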

Load the tokenizer with left padding so that all training examples can be padded to the same length.

tokenizer = AutoTokenizer.from_pretrained(
    base_model_id,
    padding_side="left",
    add_eos_token=True,
    add_bos_token=True,
)
tokenizer.pad_token = tokenizer.eos_token
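
The effect of `padding_side="left"` can be illustrated without the tokenizer. The sketch below pads a list of token ids on the left up to a fixed length and builds the matching attention mask. The `left_pad` helper and the token ids are made up for illustration; the pad id of 2 assumes the EOS token has id 2, mirroring the choice above of reusing EOS as the pad token.

```python
def left_pad(token_ids, max_length, pad_id):
    """Truncate to max_length, then pad on the left; returns (input_ids, attention_mask)."""
    token_ids = token_ids[:max_length]
    n_pad = max_length - len(token_ids)
    input_ids = [pad_id] * n_pad + token_ids
    attention_mask = [0] * n_pad + [1] * len(token_ids)
    return input_ids, attention_mask

# Hypothetical token ids; pad_id=2 is an assumption (EOS reused as padding).
ids, mask = left_pad([1, 306, 864, 2], max_length=6, pad_id=2)
print(ids)   # [2, 2, 1, 306, 864, 2]
print(mask)  # [0, 0, 1, 1, 1, 1]
```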

Let’s use the following function to add padding (max length should be determined based on your dataset’s length).

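max_length = 10000  # set this from your data; note that Llama 2's context window is 4,096 tokens

def tokenize(prompt):
    result = tokenizer(
        prompt,
        truncation=True,
        max_length=max_length,
        padding="max_length",
    )
    # For causal language modeling, the labels are the input ids themselves
    result["labels"] = result["input_ids"].copy()
    return result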
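
One way to pick `max_length` is to look at the distribution of tokenized prompt lengths and take a high percentile, capped at the model's context window. The sketch below does this for a list of lengths. The `suggest_max_length` helper and the sample lengths are hypothetical; in practice the lengths would come from something like `len(tokenizer(prompt)["input_ids"])` for each prompt.

```python
import math

def suggest_max_length(token_lengths, percentile=95, context_window=4096):
    """Return a length covering `percentile`% of examples, capped at the context window."""
    ordered = sorted(token_lengths)
    # Nearest-rank percentile: smallest value with at least `percentile`% of data at or below it.
    rank = max(1, math.ceil(percentile / 100 * len(ordered)))
    return min(ordered[rank - 1], context_window)

lengths = [512, 730, 1024, 1500, 2200, 3100, 3900, 5200]  # made-up example lengths
print(suggest_max_length(lengths))
```

Examples longer than the chosen value are truncated by the tokenizer call above, so it is worth checking how many articles that affects.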

We will frame the entity recognition problem using the following prompt template.

def generate_and_tokenize_prompt(data_point):
    full_prompt = f"""I want you to perform a data annotation task. In your output, I want you to return a json dictionary with key as phrase and value as technique, depending on whether you think the phrases in the following text uses name-calling.

A phrase is "name-calling" if you perceive that it is "Labeling the object of the propaganda campaign as either something the target audience fears, hates, finds undesirable or otherwise loves or praises".

I want you to respond with a json dictionary strictly having the detected phrases as keys and technique (Name-Calling) as value.

### Text:

{data_point["text"]}

### Output:

{data_point["target"]}

"""
    return tokenize(full_prompt)

Let’s obtain the tokenized dataset.

tokenized_train_dataset = finetune_train_dataset.map(generate_and_tokenize_prompt)

tokenized_val_dataset = finetune_dev_dataset.map(generate_and_tokenize_prompt)

Fine-tuning the model using LoRA

The following code prepares the quantized model for training.

from peft import prepare_model_for_kbit_training

model.gradient_checkpointing_enable()

model = prepare_model_for_kbit_training(model)

Next up, we define our QLoRA configuration. The values were selected using this example as reference.

from peft import LoraConfig, get_peft_model

config = LoraConfig(
    r=8,
    lora_alpha=32,
    target_modules=[  # the linear layers that receive trainable LoRA adapters
        "q_proj",
        "k_proj",
        "v_proj",
        "o_proj",
        "gate_proj",
        "up_proj",
        "down_proj",
        "lm_head",
    ],
    bias="none",
    lora_dropout=0.05,  # Conventional
    task_type="CAUSAL_LM",
)

model = get_peft_model(model, config)
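
To make the effect of `r` concrete: LoRA freezes a weight matrix of shape d_out × d_in and trains two small matrices, B (d_out × r) and A (r × d_in), whose product is scaled by `lora_alpha / r` and added to the frozen weight. The sketch below counts trainable parameters for a single 4096 × 4096 projection, the attention projection size in the 7B model. The `lora_param_count` helper is hypothetical.

```python
def lora_param_count(d_in, d_out, r):
    """Trainable parameters LoRA adds to one linear layer: A is r x d_in, B is d_out x r."""
    return r * d_in + d_out * r

d = 4096  # hidden size of Llama 2 7B
r, lora_alpha = 8, 32
full = d * d
added = lora_param_count(d, d, r)
print(f"full layer: {full:,} params, LoRA adapter: {added:,} params "
      f"({100 * added / full:.2f}%), scaling = {lora_alpha / r}")
```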

Optional: use Fully Sharded Data Parallel (FSDP) to speed up training of large models.

from accelerate import FullyShardedDataParallelPlugin, Accelerator
from torch.distributed.fsdp.fully_sharded_data_parallel import FullOptimStateDictConfig, FullStateDictConfig

fsdp_plugin = FullyShardedDataParallelPlugin(
    state_dict_config=FullStateDictConfig(offload_to_cpu=True, rank0_only=False),
    optim_state_dict_config=FullOptimStateDictConfig(offload_to_cpu=True, rank0_only=False),
)

accelerator = Accelerator(fsdp_plugin=fsdp_plugin)
model = accelerator.prepare_model(model)

Use Weights & Biases to track your experiment and its metrics.

!pip install -q wandb -U

import wandb, os

wandb.login()

wandb_project = "propaganda-finetune"
if len(wandb_project) > 0:
    os.environ["WANDB_PROJECT"] = wandb_project

Finally, start the fine-tuning job:

import transformers
from datetime import datetime

project = "propaganda-finetune"
base_model_name = "llama2-7b"
run_name = base_model_name + "-" + project
output_dir = "./" + run_name

tokenizer.pad_token = tokenizer.eos_token

trainer = transformers.Trainer(
    model=model,
    train_dataset=tokenized_train_dataset,
    eval_dataset=tokenized_val_dataset,
    args=transformers.TrainingArguments(
        output_dir=output_dir,
        gradient_accumulation_steps=1,
        per_device_train_batch_size=1,  # keep this small to avoid OOM errors
        num_train_epochs=3,
        optim="paged_adamw_32bit",  # QLoRA uses the paged AdamW optimizer
        lr_scheduler_type="cosine",
        learning_rate=0.0002,
        bf16=True,  # set to True on A100; set to False on RTX8000, V100
        # fp16=True,  # set to True on RTX8000, V100
        gradient_checkpointing=True,
        logging_steps=1,  # log at each step
        logging_dir="./logs",
        warmup_steps=5,
        weight_decay=0.1,
        save_strategy="steps",  # save checkpoints on a step-based schedule
        save_steps=3,  # save a checkpoint every third step
        evaluation_strategy="steps",  # newer transformers releases call this eval_strategy
        eval_steps=3,  # evaluate every third step
        do_eval=True,
        report_to="wandb",
        run_name=f"{run_name}-{datetime.now().strftime('%Y-%m-%d-%H-%M')}",
    ),
    data_collator=transformers.DataCollatorForLanguageModeling(tokenizer, mlm=False),
)

model.config.use_cache = False  # the generation cache is not needed during training
model.config.pretraining_tp = 1
trainer_stats = trainer.train()
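
The `lr_scheduler_type="cosine"` and `warmup_steps=5` settings mean the learning rate ramps up linearly for the first five steps and then follows a cosine curve down toward zero. Below is a minimal sketch of that shape, written in plain Python rather than taken from the library. The `lr_at_step` helper and the total of 100 steps are assumptions for illustration.

```python
import math

def lr_at_step(step, total_steps, base_lr=2e-4, warmup_steps=5):
    """Linear warmup followed by cosine decay to zero."""
    if step < warmup_steps:
        return base_lr * step / warmup_steps
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return base_lr * 0.5 * (1.0 + math.cos(math.pi * progress))

# Hypothetical run of 100 optimizer steps.
for step in (0, 5, 50, 100):
    print(step, f"{lr_at_step(step, total_steps=100):.2e}")
```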

Inference

Restart your kernel and run the following cells for inference.

import torch
from accelerate import Accelerator
from transformers import AutoTokenizer, AutoModelForCausalLM, BitsAndBytesConfig

base_model_id = "meta-llama/Llama-2-7b-hf"

quant_config = BitsAndBytesConfig(
    load_in_4bit=True,
    bnb_4bit_quant_type="nf4",
    bnb_4bit_compute_dtype=torch.bfloat16,
    bnb_4bit_use_double_quant=True,
)

base_model = AutoModelForCausalLM.from_pretrained(
    base_model_id,  # Llama 2 7b, same as before
    quantization_config=quant_config,  # Same quantization config as before
    device_map={"": Accelerator().local_process_index},
    trust_remote_code=True,
)

eval_tokenizer = AutoTokenizer.from_pretrained(
    base_model_id,
    add_bos_token=True,
    trust_remote_code=True,
)

Load the QLoRA model from a desired checkpoint.

from peft import PeftModel

ft_model = PeftModel.from_pretrained(base_model, "llama2-7b-propaganda-finetune/checkpoint-371")

Run inference on a test article, where test_news_article holds the article text as a string.

eval_prompt = f"""I want you to perform a data annotation task. In your output, I want you to return a json dictionary with key as phrase and value as technique, depending on whether you think the phrases in the following text uses loaded language.

A phrase is "loaded language" if you perceive that it is "Using words or phrases with strong emotional implications to influence an audience".

I want you to respond with a json dictionary strictly having the detected phrases as keys and technique (Loaded Language) as value.

### Text:

{test_news_article}

### Output:

"""

model_input = eval_tokenizer(eval_prompt, return_tensors="pt").to("cuda")

with torch.no_grad():
    generated_ids = ft_model.generate(
        **model_input,
        max_new_tokens=500,
        repetition_penalty=1.5,
        top_p=0.1,
        top_k=20,
    )[0]
    output = eval_tokenizer.decode(generated_ids, skip_special_tokens=False)

# Strip the prompt so that only the newly generated text remains
new_tokens = output.replace(eval_prompt, "")
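
Since the prompt asks for a JSON dictionary, the generated text still has to be parsed, and the model may add extra text around it. The sketch below extracts the first `{...}` span and parses it, returning an empty dict on failure. The `parse_phrases` helper and the sample string are hypothetical, and real model output may need more robust handling.

```python
import json
import re

def parse_phrases(generated_text):
    """Extract the first {...} block from the text and parse it as JSON."""
    match = re.search(r"\{.*?\}", generated_text, flags=re.DOTALL)
    if match is None:
        return {}
    try:
        parsed = json.loads(match.group(0))
    except json.JSONDecodeError:
        return {}
    return parsed if isinstance(parsed, dict) else {}

# Made-up generation, for illustration only.
sample = 'Here you go: {"utter disgrace": "Loaded Language"}</s>'
print(parse_phrases(sample))
```

With the variables above, this would be called as `parse_phrases(new_tokens)`.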